The rise of artificial intelligence (AI) has transformed the way businesses communicate, enabling unprecedented levels of efficiency and personalization.

However, this technological shift also brings new security risks, particularly the growing threat of AI-generated fraud.

As companies increasingly incorporate AI-driven tools into their operations, it’s vital to recognize and mitigate these emerging dangers.

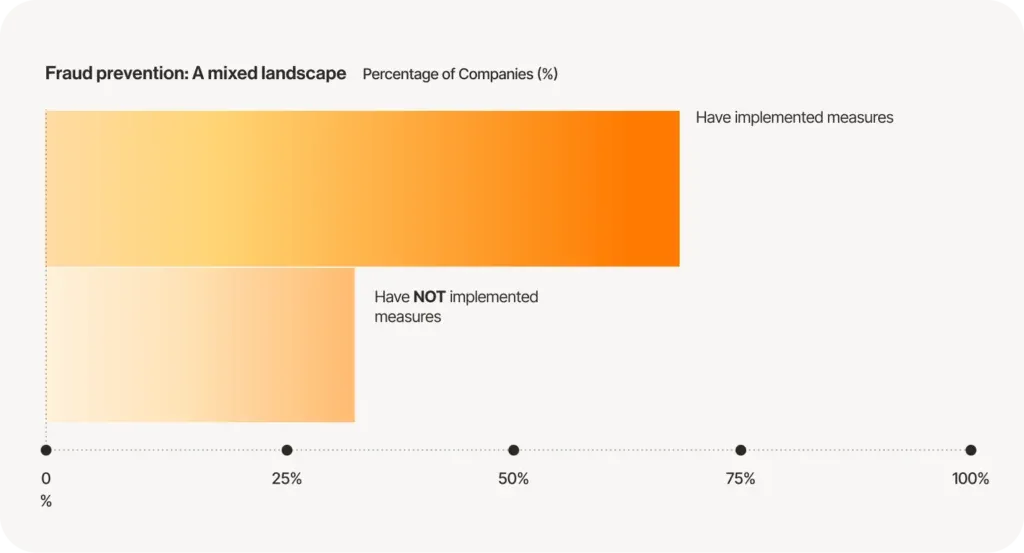

AI-powered fraud presents a significant challenge across industries. A recent survey conducted by RingCentral revealed that 72% of respondents fear their organization could be targeted by AI-generated voice or video fraud within the next year.

How AI-Generated Fraud Works

One of the most alarming aspects of AI fraud is deepfake technology: highly realistic, AI-manipulated audio and video that can be used to impersonate individuals or spread false information.

For example, fraudsters may use AI-generated voice recordings to impersonate executives and deceive employees into revealing confidential data or approving unauthorized transactions.

While AI-driven chatbots and virtual assistants enhance customer service, they can also be exploited by cybercriminals.

Malicious actors may use advanced AI models to craft highly convincing phishing messages or conduct large-scale automated social engineering attacks.

Despite these risks, many individuals remain overly confident in their ability to detect AI-generated fraud. The survey found that 86% of respondents believe they can distinguish between real and AI-generated voice or video content. However, as AI technology advances, this confidence may be misplaced, potentially leaving businesses exposed to increasingly sophisticated threats.

Small businesses, in particular, face heightened vulnerability to AI fraud. The survey indicated that respondents from companies with fewer than 20 employees were the least confident in their ability to identify AI-generated fraud, with only 62.75% expressing confidence. This disparity underscores the need for targeted support and education, particularly for smaller organizations that may lack the necessary security resources.

Strengthening Security Against AI Fraud

To combat these evolving threats, businesses must take a proactive approach to AI security. This includes:

- Deploying advanced fraud detection technologies capable of identifying AI-generated content.
- Establishing clear security protocols to mitigate risks, such as confirming payment or data requests through a second, trusted channel before acting on them.
- Providing regular training so employees recognize AI-driven fraud tactics.
- Staying informed about the latest AI fraud techniques and adjusting security measures accordingly.

By understanding the risks associated with AI-generated fraud and taking proactive measures, organizations can leverage AI’s benefits while safeguarding their operations and maintaining trust with customers and partners.

What steps is your organization taking to combat AI-generated fraud? How confident are you in detecting and preventing these sophisticated attacks?